Today’s topic is VMware Cloud Director (VCD) inter-tenant routing with NSX-T backed provider VDCs (pVDCs). The reason for writing this post is that some use-cases require routed connectivity between Org VDCs that may or may not be part of the same Org. Due to the multi-tenant nature of VCD, most external connectivity options (with NSX-T backed pVDCs) are focused on internet and private link connectivity.

Sometimes it’s necessary to communicate with networks in other Orgs or Org VDCs without using the sharing option. So in a nutshell this post is about inter Org / Org VDC routing.

First, let’s start with a simple use-case of inter-tenant routing: a customer runs some of its central IT services, like ADDS, LDAP, DNS and NTP, in Org1. A separate team builds and manages a stack of applications in Org2 that needs the services running in Org1 over a private link.

Routing options

Before digging deeper into the matter, it’s important to know which routing options are available to tenants in the current VCD version (10.1) in combination with NSX-T 2.5. These are:

- Routed network using a shared T0

- Routed network using a dedicated T0

- Routing using IPSec VPN

- Routing using an Imported network

From the list above, only the “Routed network using a shared T0” option is not suitable for inter-tenant routing by itself, since it does not support Route Advertisement of connected segments. On a shared T0, only the IPSec VPN feature makes routing between tenants possible. That’s why the VPN option is listed and discussed separately in this post.

It is important to note that only the Service Provider (SP) is able to configure the external facing (T0 uplink) networks, including the routing configuration, which are needed for any external communication.

Routing options overview

First, let’s explain the main differences between the routing options available to tenants. Keep in mind that a single NSX Manager can control up to 160 T0 routers and 4000 T1 routers in total, and that a single T0 can support up to 400 T1s.

| | Routed (Shared T0) | Routed (Dedicated T0) | VPN | Imported |
|---|---|---|---|---|
| Primary purpose | Internet connectivity | WAN / MPLS | WAN | Custom |
| Self-service via VCD portal | Yes | Yes | Yes | No |
| NAT required | Yes | No | No | No |
| Supports Route Advertising | No | Yes | No | Yes |
| Supports BGP | No | Yes | No | Yes |
| Dedicated T0 per Org VDC required | N/A | Yes | No | No |
| Features | o | + | N/A | ++ |
| Routing Scalability | N/A | + | o | ++ |
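To make the comparison a bit more tangible, the table can be encoded as data and filtered on the two properties that matter most for inter-tenant routing at scale: Route Advertisement of connected networks, and not needing a dedicated T0 per Org VDC. This is a minimal Python sketch; the `RoutingOption` class and the filter criteria are my own simplification of the overview table, not a VCD or NSX-T API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RoutingOption:
    """One column of the routing options overview table (hypothetical model)."""
    name: str
    self_service: bool
    nat_required: bool
    route_advertisement: bool
    bgp: bool
    dedicated_t0_required: Optional[bool]  # None means "N/A" in the table


# Values copied from the overview table (VCD 10.1 with NSX-T 2.5).
OPTIONS = [
    RoutingOption("Routed (Shared T0)", True, True, False, False, None),
    RoutingOption("Routed (Dedicated T0)", True, False, True, True, True),
    RoutingOption("VPN", True, False, False, False, False),
    RoutingOption("Imported", False, False, True, True, False),
]


def inter_tenant_candidates(options):
    """Keep options that advertise connected networks without a dedicated T0 per Org VDC."""
    return [
        o.name
        for o in options
        if o.route_advertisement and not o.dedicated_t0_required
    ]


print(inter_tenant_candidates(OPTIONS))  # ['Imported']
```

The filter deliberately ignores self-service, which is exactly the trade-off discussed later: the option that scales best is the one tenants cannot manage themselves.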

VCD tenant routing primer

Before describing each of the four options in more depth: I’ll assume basic NSX-T and routing knowledge.

For a good overview of all the NSX-T 2.5 capabilities in combination with VCD, read Tomas Fojta’s post VMware Cloud Director 10.1: NSX-T Integration.

Routed (Shared)

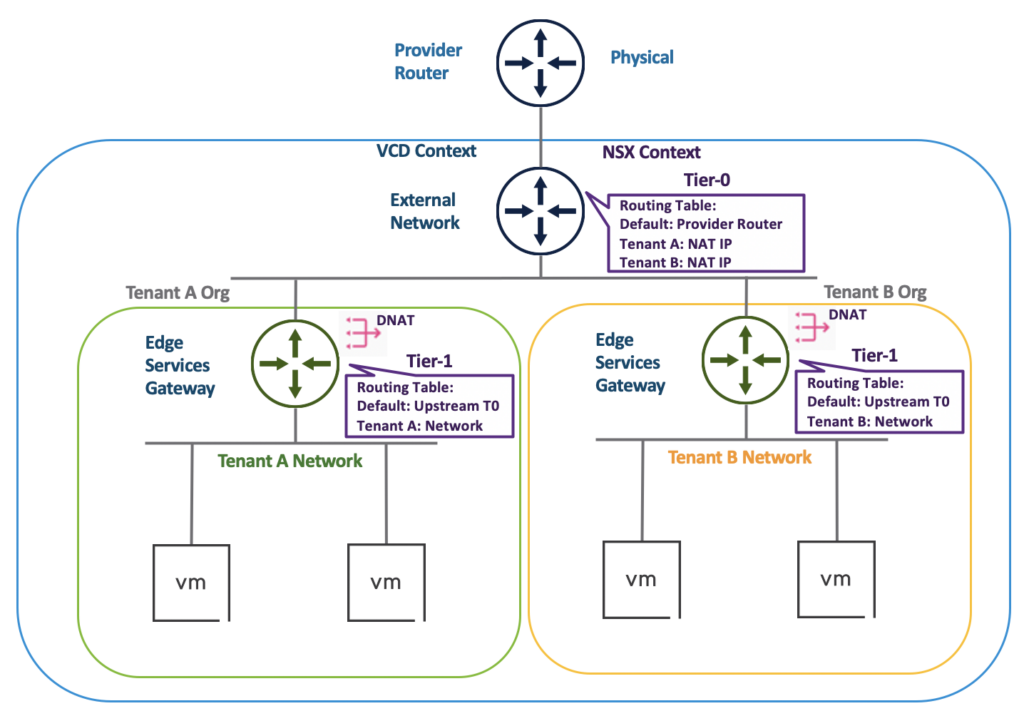

The shared routed option is probably the most used option for tenant upstream (mostly internet) connectivity. In this case the SP creates a shared T0 in NSX Manager, assigns it a pool of publicly routable IP addresses and adds it to VCD as an “External Network”. Next, the SP creates a new Edge Gateway in VCD (which is a T1 under the hood) for the Org VDC, connects it to the shared T0 and configures one or more public IPs from the T0 IP pool.

Tenants can create one or more routed networks and connect them to the Edge Gateway (T1). VMs are then able to connect to the internet using NAT and firewall rules: SNAT rules for outbound traffic and DNAT rules for inbound traffic. Tenants can only use private IP space for their networks connected to the Edge Gateway. These tenant networks are not advertised to the upstream T0; only the configured DNAT IP addresses are advertised upstream and become part of the T0 routing table.
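A toy model can help to show why only the DNAT addresses end up in the T0 routing table. The sketch below is plain Python using the standard `ipaddress` module; the `EdgeGateway` class, its rule dictionaries, the /32 host routes and the example addresses are hypothetical and only model the behavior described above. This is not how VCD or NSX-T store NAT rules.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class EdgeGateway:
    """Hypothetical model of a T1 Edge Gateway connected to a shared T0."""
    connected_networks: list  # private tenant networks, never advertised upstream
    snat_rules: dict = field(default_factory=dict)  # internal network -> public IP
    dnat_rules: dict = field(default_factory=dict)  # public IP -> internal IP

    def add_snat(self, internal_cidr: str, public_ip: str) -> None:
        # Outbound: hide a tenant network behind a public IP from the T0 pool.
        self.snat_rules[ipaddress.ip_network(internal_cidr)] = ipaddress.ip_address(public_ip)

    def add_dnat(self, public_ip: str, internal_ip: str) -> None:
        # Inbound: publish an internal host on a public IP from the T0 pool.
        self.dnat_rules[ipaddress.ip_address(public_ip)] = ipaddress.ip_address(internal_ip)

    def advertised_to_t0(self) -> list:
        # As described above: only DNAT addresses are advertised (IPv4 /32 host routes here).
        return sorted(ipaddress.ip_network(f"{ip}/32") for ip in self.dnat_rules)


gw = EdgeGateway(connected_networks=["10.10.0.0/24"])
gw.add_snat("10.10.0.0/24", "203.0.113.10")
gw.add_dnat("203.0.113.11", "10.10.0.20")
print(gw.advertised_to_t0())  # [IPv4Network('203.0.113.11/32')]
```

The private 10.10.0.0/24 network never shows up upstream, which is exactly why this option cannot be used for routed inter-tenant traffic on its own.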

For every subsequent tenant an Edge Gateway (T1) is created and connected to the same shared T0. Connectivity is configured the same way as above.

Routed (Dedicated)

The dedicated routed option is intended to provide connectivity to the tenant’s on-premises or public cloud network over WAN or MPLS based links. In this case the SP creates a dedicated T0 in NSX Manager, assigns it a pool of (customer provided) IP addresses and adds it to VCD as a “Dedicated External Network”. Next, the SP creates a new Edge Gateway in VCD (which is a T1 under the hood) for the Org VDC and assigns it to the dedicated T0. If needed, the SP can configure one or more public IPs from the T0 IP pool. This is not necessary when only connected Org VDC networks are advertised.

Only one Edge Gateway can be connected to a T0 marked as “Dedicated External Network”. This 1:1 relation of a T1 to a T0 is what makes Route Advertisement of connected tenant networks and the use of BGP available. The downside of having a dedicated T0 for every tenant is that each one has to be backed by its own Edge nodes. At scale this can become very resource intensive on the Edge Cluster. In addition, having lots of Edge nodes can raise the administrative effort for the operations teams considerably.

An important thing to note is that in the default configuration only the SP is able to configure BGP, not tenants. The SP can change this behavior using VCD Rights Bundles.

VPN

The IPSec based VPN option is intended to provide L3 connectivity to the tenant’s on-premises and public cloud networks (mostly) via the internet, and requires a routed (shared or dedicated) Edge Gateway. The advantage of the VPN option is that tenants can configure it in a self-service way.

IPSec VPN in VCD currently only supports a policy-based configuration. This can be a limitation in situations where Route Advertisement of connected tenant networks and the use of BGP are needed.

Imported

The “Imported” option was probably added because the NSX-T integration is currently not up to par with NSX-V. Despite the differences in features, I really like the “Imported” option because it gives the SP the ability to use all the NSX-T bells and whistles for tenants: not only the native NSX-T ones, but also partner integrations. VCD leaves the whole segment configuration to NSX Manager. In NSX-V backed pVDCs the opposite is true: all NSX configuration should be performed by VCD to prevent inconsistencies.

The “Imported” option uses pre-created NSX segments. During the segment import, which only the SP can perform, you need to provide the usual parameters such as name, gateway CIDR and IP pool. Once imported, all firewall, routing and partner services are available for the SP to configure through NSX Manager.
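Since the import relies on parameters typed in by the SP, it can be worth sanity-checking them beforehand. The Python sketch below validates a gateway CIDR and a static IP pool with the standard `ipaddress` module; the function name, the pool format (a start and an end address) and the example values are assumptions for illustration, not the VCD import API.

```python
import ipaddress


def validate_import_params(gateway_cidr: str, pool_start: str, pool_end: str) -> list:
    """Return the problems found in hypothetical imported-segment parameters."""
    problems = []
    iface = ipaddress.ip_interface(gateway_cidr)  # keeps the gateway IP and its subnet
    network = iface.network
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)

    if start > end:
        problems.append("pool start is higher than pool end")
    for addr in (start, end):
        if addr not in network:
            problems.append(f"{addr} is outside {network}")
    if start <= iface.ip <= end:
        problems.append(f"gateway {iface.ip} overlaps the IP pool")
    if iface.ip in (network.network_address, network.broadcast_address):
        problems.append("gateway uses the network or broadcast address")
    return problems


print(validate_import_params("10.20.0.1/24", "10.20.0.10", "10.20.0.100"))  # []
print(validate_import_params("10.20.0.1/24", "10.20.0.1", "10.20.1.5"))
# ['10.20.1.5 is outside 10.20.0.0/24', 'gateway 10.20.0.1 overlaps the IP pool']
```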

Although tenants cannot manage “Imported” networks themselves, the feature provides lots of options, such as Route Advertisement of connected tenant networks, BGP, route-based VPN, IDS / IPS and so on.

Inter-tenant routing use-case

Now that you’re up-to-speed on the VCD network related options, it’s time to dive into the main goal of this post, which is inter-tenant routing.

Customer requirements

- Scalable solution for 100+ Org VDCs, belonging to 25+ Orgs

- 3 types of routers per Org VDC for different traffic types

- Router type 1: Internet access and application publishing (including Load Balancing)

- Router type 2: Generic inter Org (VDC) communication

- Router type 3: Security and central services related inter Org (VDC) communication

- Ability to use Distributed firewalling (DFW) for all traffic types

- Ability to use IDS / IPS for certain traffic types

Design choices

Based on the customer requirements above, Imported networks will be used for the segments connected to the 3 types of T1 routers. Using Imported networks is the only way the Distributed firewalling (DFW) and IDS / IPS features can be used in this design, since VCD 10.1 cannot manage these natively.

So, routing-wise, which features of the current VCD release could be used (and why) if DFW and IDS / IPS were not necessary?

Routed (Shared)

The use of shared routed networks is a viable solution for router type 1. Applications that need to be exposed to the internet can use NAT and Edge based firewalling. In this case the NAT enabled public IP addresses on the T1 are advertised to the T0. Route Advertisement of connected segments is neither needed nor supported here.

From the scalability perspective, shared routed networks are also a good solution. A single T0 can easily handle 100+ T1 routers; up to 400 in fact, according to the VMware ConfigMax.

For router types 2 and 3, shared routed networks are not suitable since Route Advertisement of connected segments is not supported.

Routed (Dedicated)

For all router types, dedicated routed networks are not a suitable solution, because scalability is limited and operational effort increases.

From the scalability perspective, every dedicated routed network requires its own T0. Since the NSX-T system limit is 160 T0s and the requirements say the solution must scale to 100+ Org VDCs (each with 3 router types), this is a no-go.
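The limits quoted earlier (160 T0s, 4000 T1s and 400 T1s per T0) make this easy to check with a bit of arithmetic. The Python sketch below compares a dedicated layout (one T0 per router per Org VDC) with a shared layout (one T0 per router type); the 100 Org VDCs and 3 router types come from the customer requirements, while the `check_layout` function itself is just a hypothetical illustration.

```python
# NSX-T 2.5 limits as quoted in this post.
MAX_T0 = 160
MAX_T1 = 4000
MAX_T1_PER_T0 = 400


def check_layout(org_vdcs: int, router_types: int, dedicated: bool) -> dict:
    """Count T0/T1 gateways for a layout and compare them with the NSX-T limits."""
    t1_total = org_vdcs * router_types  # one T1 per router type per Org VDC
    if dedicated:
        t0_total = t1_total  # 1:1 relation between T1 and dedicated T0
        t1_per_t0 = 1
    else:
        t0_total = router_types  # one shared T0 per router type
        t1_per_t0 = org_vdcs
    fits = t0_total <= MAX_T0 and t1_total <= MAX_T1 and t1_per_t0 <= MAX_T1_PER_T0
    return {"t0": t0_total, "t1": t1_total, "fits": fits, "t0_headroom": MAX_T0 - t0_total}


print(check_layout(100, 3, dedicated=True))   # {'t0': 300, 't1': 300, 'fits': False, 't0_headroom': -140}
print(check_layout(100, 1, dedicated=True))   # {'t0': 100, 't1': 100, 'fits': True, 't0_headroom': 60}
print(check_layout(100, 3, dedicated=False))  # {'t0': 3, 't1': 300, 'fits': True, 't0_headroom': 157}
```

Even with only one dedicated router type per Org VDC, 100 Org VDCs leave a headroom of just 60 T0s, which is why the limit is uncomfortably close.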

The second reason not to use this option is that Route Advertisement of connected segments will work, but the routes will not automatically be exchanged between the T0 routers. Route exchange must be configured additionally on the uplinks of all T0 routers.

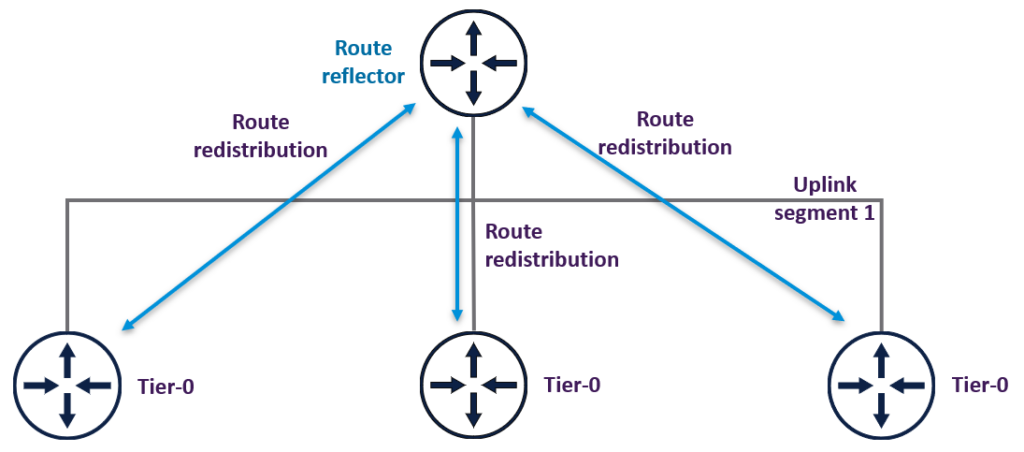

To solve this issue at scale, route reflectors could be a great solution. With route reflectors, each T0 only needs to peer (using BGP) with a set of route reflectors, instead of with all of the other 100+ T0 routers. This reduces the number of BGP peerings and, as a result, the administrative overhead.
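The gain is easy to quantify. In a full mesh every T0 peers with every other T0, which gives n(n-1)/2 sessions, while with route reflectors every T0 only peers with each reflector. The Python sketch below assumes iBGP between the T0s, two route reflectors and one session between those reflectors; these are illustrative assumptions, the design above does not prescribe a number of reflectors.

```python
def full_mesh_sessions(t0_count: int) -> int:
    """Every T0 peers with every other T0: n * (n - 1) / 2 sessions."""
    return t0_count * (t0_count - 1) // 2


def route_reflector_sessions(t0_count: int, reflectors: int = 2) -> int:
    """Every T0 peers with each route reflector, plus a full mesh between the reflectors."""
    return t0_count * reflectors + full_mesh_sessions(reflectors)


for n in (5, 25, 100):
    print(n, full_mesh_sessions(n), route_reflector_sessions(n))
# 5 10 11
# 25 300 51
# 100 4950 201
```

For a handful of T0s a full mesh is not worse at all; the benefit of route reflectors only shows at scale.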

To summarize, the dedicated routed network is not an option because:

- Requires a 1:1 relation with a T0. The NSX system limit is 160 T0 routers. With over 100 Org VDCs, this limit is too close.

- Routing at this scale would require the use of route reflectors. Otherwise every T0 would need to peer with all other T0 routers.

VPN

Handling 100+ Org VDCs with 3 (T1) routers each requires a scalable solution with low administrative effort. This argues against the use of VPN.

Configuring and managing the required number of policy-based VPNs is simply not feasible. It’s a bit like configuring BGP peering between all of the T0s in the previous section. For up to 5 interconnected tenants, this probably works just fine.

Since VCD currently only supports policy-based VPNs, dynamic (BGP) routing cannot be used, which turns this into an operations nightmare. For example, changing the IP ranges assigned to one or more Org VDCs could become quite a hassle.
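To put numbers on that: if every Org VDC needs a policy-based VPN to every other Org VDC for each inter Org VDC router type (types 2 and 3 in the requirements), the number of tunnels grows quadratically, and changing the IP range of one Org VDC means editing the policy on both ends of each of its tunnels. The Python sketch below assumes a full mesh per router type and one tunnel per pair of Org VDCs, which is a simplification for illustration.

```python
def vpn_tunnels(org_vdcs: int, router_types: int = 1) -> int:
    """Full mesh of policy-based VPNs per router type: n * (n - 1) / 2 tunnels each."""
    return router_types * org_vdcs * (org_vdcs - 1) // 2


def policies_to_edit_on_range_change(org_vdcs: int) -> int:
    """Changing one Org VDC's range touches both ends of each of its n - 1 tunnels."""
    return 2 * (org_vdcs - 1)


print(vpn_tunnels(5))                         # 10
print(vpn_tunnels(100, router_types=2))       # 9900
print(policies_to_edit_on_range_change(100))  # 198
```

With dynamic routing, the same range change would be a single update that is propagated automatically.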

On the positive side, VPN connections can be used in a self-service way and are available for shared and dedicated routed networks in VCD.

Imported

This leaves the use of Imported segments, a feature I like very much. It gives the SP the choice to use all the NSX-T features that are needed. The downside for tenants is that only the SP can make changes to this type of network, which limits the self-service options.

For this use-case Imported segments were the way to go for scalability reasons and the use of specific features which are currently not supported in VCD.

To summarize, the choice for using Imported segments was made because of:

- Only a single T0 is needed for every type of router. This prevents hitting the NSX limit of 160 T0s.

- Automated dynamic routing at scale between all T1s through a single T0

- Route Advertisement of connected tenant networks

- Administrative effort is minimized this way

- The NSX limits of 400 T1s per T0 and 4000 T1s overall are not even close to being reached.

- Load Balancing on router type 1 is possible

- Distributed firewall is possible

- IDS / IPS for E-W is possible
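Conceptually, the shared T0 per router type acts as the hub: every T1 advertises its connected (imported) segments, the T0 collects them in a single routing table, and traffic between Org VDCs, for example an application in Org2 reaching DNS in Org1, follows those routes. The Python sketch below models that with a longest-prefix-match lookup; the `SharedT0` class, the T1 names and the prefixes are hypothetical, and real NSX-T route advertisement and forwarding involve far more than this.

```python
import ipaddress


class SharedT0:
    """Toy routing table of a shared T0 fed by T1 route advertisements."""

    def __init__(self):
        self.routes = {}  # prefix -> name of the advertising T1

    def advertise(self, t1_name: str, connected_segments: list) -> None:
        # A T1 advertises its connected segments; the T0 installs them automatically.
        for cidr in connected_segments:
            self.routes[ipaddress.ip_network(cidr)] = t1_name

    def lookup(self, destination: str):
        # Longest-prefix match over all advertised segments; None if no route exists.
        addr = ipaddress.ip_address(destination)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None
        return self.routes[max(matches, key=lambda net: net.prefixlen)]


t0 = SharedT0()
t0.advertise("org1-vdc1-t1", ["10.1.0.0/24", "10.1.1.0/24"])  # central services in Org1
t0.advertise("org2-vdc1-t1", ["10.2.0.0/24"])                 # applications in Org2
print(t0.lookup("10.1.1.53"))  # org1-vdc1-t1
print(t0.lookup("10.9.0.1"))   # None
```

Adding another Org VDC only means that its T1 advertises its segments; no per-tenant peering or VPN has to be configured.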

To Conclude

My goal with this post is two-fold. The first goal is to show you the basic possibilities and limitations of NSX-T backed networks in the latest VCD version.

The second goal is to show how a network-heavy use-case could be implemented using VCD: not only which network options are available, but more importantly what they offer and why they could be used.

For true multi-tenant routing at the T0s and load balancing at the T1s, the Edge cluster configuration also needs to be taken into account. Multi-tenancy VRF support was introduced in NSX-T 3.0 and is a big leap forward. For load balancing, Bare-Metal Edges are well suited. Maybe more on that in another post.

Before signing off, I’d like to thank Daniel Paluszek and Tomas Fojta for reviewing this post and giving spot-on feedback, and for lending me the drawings I based mine on.

Writing this post took me the longest of all since I started my blog back in November. Now that it’s done, I’m glad to be able to share it. On top of the challenging times everyone is in, the preparations for our new house, which is yet to be built, and preparing for the VCAP Design exam delayed this post considerably. I’m very happy that Covid-19 is on the decline here in The Netherlands, the house is on track and the exam is passed, so good times ahead 🙂

Now I’m looking forward to the vacation with my family, which will start soon. I wish you all an awesome vacation, and stay safe.

Cheers, Daniël

4 Comments

Stéphane H. · September 11, 2020 at 9:23 am

Thank you for this article ! really helpful

I think the “VCD Shared T0 example” scheme is the same as “VCD Dedicated T0 example” or did I miss something … ?

Daniël Zuthof · September 30, 2020 at 9:23 pm

Hi Stéphane,

You are right. Thanks for your feedback. The correct image is now in the post.

Cheers, Daniel

Defmond LAW · June 18, 2021 at 4:58 pm

Hello, thx for sharing 🙂 dude. I have a question about the T0 for shared or dedicated model. Can we use VRF on T0 gateway instead of T0 by edge node ?

Daniël Zuthof · August 4, 2021 at 11:12 am

Hi Desmond,

I was quite occupied during my PTO last month. I’ll get back to you on this.

Cheers, Daniël