Today’s post is about configuring Jumbo frames in NSX for VM-to-VM communication (East / West) and for upstream connectivity (North / South). NSX supports switching and routing of Jumbo frames. We speak of Jumbo frames when the MTU is larger than 1500 bytes. Jumbo frames are commonly configured with an MTU of 9000 bytes, while physical switches often have an upper limit of 9216.

The intent of using Jumbo frames is to increase bandwidth and lower CPU overhead. The downside can be higher operational overhead due to the extra configuration and an increased chance of configuration issues. The catch with Jumbo frames is that they need to be enabled across the whole path of the packet.
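To illustrate why the whole path matters, here is a minimal sketch: the usable MTU of a path is the smallest MTU of any hop on it. The function name and the hop values are made up for illustration; they are not taken from a real environment.

```shell
#!/bin/sh
# Sketch: the effective MTU of a path equals the smallest hop MTU.
# All hop values used below are hypothetical illustration values.
path_mtu() {
    [ "$#" -gt 0 ] || return 1      # need at least one hop
    min=$1
    shift
    for mtu in "$@"; do
        if [ "$mtu" -lt "$min" ]; then
            min=$mtu
        fi
    done
    echo "$min"
}

# Guest OS, dvSwitch, physical switch, one forgotten hop left at 1500,
# dvSwitch, guest OS
path_mtu 8800 9000 9216 1500 9000 8800
```

A single hop left at the default MTU limits the whole path, which is why every component in the following sections needs attention.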

Involved components

To support Jumbo frames in NSX, the MTU needs to be adjusted in several places, such as the ESXi hosts and Edge Nodes. For this post I’m assuming that an MTU of 9000 or larger is already supported by the physical network.

Let me walk you through it.

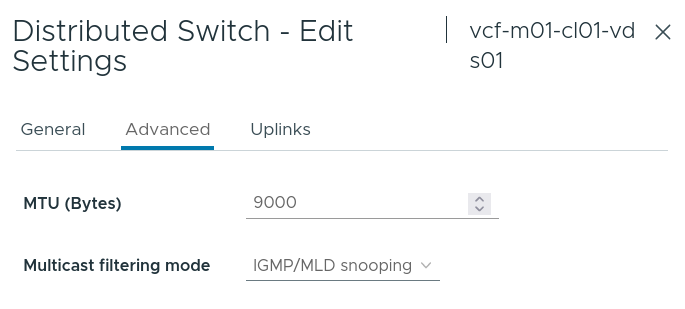

Configure MTU on vSphere Distributed Virtual Switch (dvSwitch)

First, the dvSwitch needs to support Jumbo frames. Make sure it is set to 9000. Aspects to take into account are:

- Configuring the dvSwitch MTU is a vCenter construct

- The setting defines the NSX Tunnel Endpoint (TEP) MTU for overlay segments between or within ESXi hosts

- The setting is only applicable for ESXi hosts connected to the dvSwitch (VDS)

- Do not configure the MTU size in the ESXi Host Uplink Profile in NSX Manager

- Doing so will throw errors

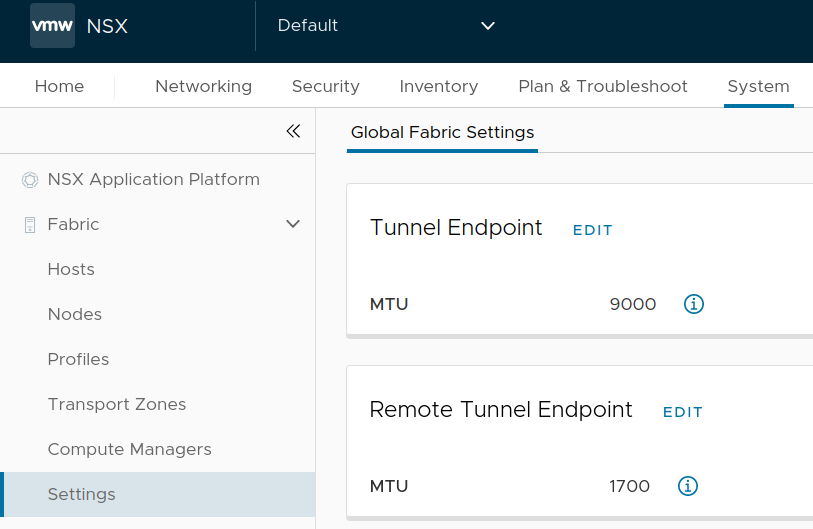
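A common way to verify the TEP path between two ESXi hosts after changing the dvSwitch MTU is a ping with the don't-fragment bit set, run from the ESXi shell. The sketch below only computes the ICMP payload size (MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header) and prints the resulting `vmkping` command. The remote TEP address 192.0.2.11 is a placeholder, and the helper names are my own.

```shell
#!/bin/sh
# Sketch: build a don't-fragment vmkping command for a given TEP MTU.
# 192.0.2.11 is a placeholder; use the TEP IP of another ESXi host.

# ICMP payload = MTU - 20 (IPv4 header) - 8 (ICMP header)
icmp_payload() {
    echo $(( $1 - 28 ))
}

# $1 = remote TEP IP, $2 = MTU to test
tep_ping_cmd() {
    echo "vmkping ++netstack=vxlan -d -s $(icmp_payload "$2") $1"
}

# Print the command to run on the ESXi host
tep_ping_cmd 192.0.2.11 9000
```

If the printed command gets replies on the ESXi host, the path between both TEPs carries 9000-byte packets without fragmentation.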

Global Tunnel Endpoint (TEP) MTU

Secondly, the Global Tunnel Endpoint (TEP) MTU needs to be configured. Aspects to take into account are:

- Configuring the Global TEP MTU is an NSX construct

- Configured via: System > Fabric > Settings > Global Fabric Settings > Tunnel Endpoint

- This setting defines the TEP MTU for overlay segments between, to and within Edge Nodes

- This setting is only applicable to N-VDS based Transport Nodes, like Edge Nodes

- Not applicable to ESXi because they are VDS based, not N-VDS

- Global Tunnel Endpoint MTU needs to be set to 9000.

- MTU setting needs to be equal to the dvSwitch MTU

- MTU setting is automatically inherited by the Edge Node Uplink Profile

- There is no need to set the optional MTU in the Edge Node Uplink Profile; doing so is not recommended

- TEP traffic cannot be fragmented on the physical network

- If the physical network cannot handle the 9000 MTU packet size, TEP packets are dropped and cause severe NSX outages

- Default is 1700 in NSX 4.x and 1600 in NSX 3.x

- When upgrading NSX from 3.x to 4.x the value of 1600 is automatically increased to 1700 during the upgrade process
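The rules from this list can be captured in a small sanity check. This is a sketch only: the function name is hypothetical and the three values are entered by hand, not read from vCenter or NSX Manager.

```shell
#!/bin/sh
# Sketch: check the TEP MTU rules described above.
# $1 = dvSwitch MTU, $2 = Global TEP MTU, $3 = physical network MTU
# Values are supplied manually; nothing is queried from vCenter or NSX.
check_tep_mtu() {
    dvs=$1 tep=$2 phys=$3
    if [ "$tep" -ne "$dvs" ]; then
        echo "mismatch: Global TEP MTU $tep != dvSwitch MTU $dvs"
        return 1
    fi
    if [ "$phys" -lt "$tep" ]; then
        echo "too small: physical MTU $phys < TEP MTU $tep (TEP packets would be dropped)"
        return 1
    fi
    echo "ok"
}

check_tep_mtu 9000 9000 9216
```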

Global Remote Tunnel Endpoint (RTEP) MTU

Third, the Global Remote Tunnel Endpoint (RTEP) MTU can be configured. This is only relevant when NSX Federation (not in scope for this post) is used; otherwise leave it at the default. Aspects to take into account are:

- Configuring the Global RTEP MTU is an NSX construct

- Configured via: System > Fabric > Settings > Global Fabric Settings > Remote Tunnel Endpoint

- This setting defines the maximum MTU size for RTEP traffic between NSX instances for constructs like Global T0 / T1, etc.

- RTEP MTU is used by NSX Federation

- Needs to be set to the maximum MTU your WAN supports

- Default is 1700

- Set to 9000 if supported by the WAN

- RTEP traffic can be fragmented on the physical network, although this is not desirable

- When RTEP traffic needs to be fragmented, it could cause additional latency and lower bandwidth

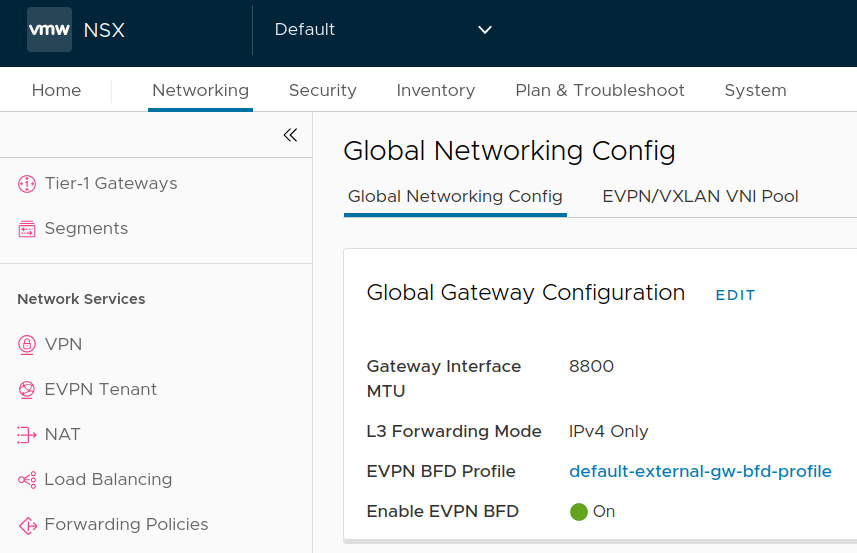
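To get a feeling for what fragmentation means when the RTEP MTU is larger than what the WAN supports, the following sketch counts the IPv4 fragments needed for one packet. It assumes a 20-byte IPv4 header without options, and that every fragment except the last carries a payload that is a multiple of 8 bytes. The function name is my own.

```shell
#!/bin/sh
# Sketch: number of IPv4 fragments for one packet on a smaller link MTU.
# Assumes a 20-byte IPv4 header (no options).
# $1 = packet size (IP header included), $2 = link MTU
ipv4_fragments() {
    packet=$1 link_mtu=$2
    payload=$(( packet - 20 ))
    # Fragment payloads must be a multiple of 8 bytes (except the last one)
    per_fragment=$(( (link_mtu - 20) / 8 * 8 ))
    echo $(( (payload + per_fragment - 1) / per_fragment ))
}

# A 9000-byte RTEP packet crossing a WAN link that only supports 1500
ipv4_fragments 9000 1500
```

Every extra fragment adds header overhead and reassembly work, which is where the additional latency and lower bandwidth come from.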

Global Gateway Configuration MTU

Fourth, the Global Gateway Configuration MTU needs to be configured. Aspects to take into account are:

- Configuring the NSX Global Gateway Configuration MTU is an NSX construct

- Configured via: Networking > Settings > Global Networking Config > Global Gateway Config

- This setting defines the maximum MTU the T0 / T1 router (SR component) gateway can handle

- The overlay protocol (GENEVE) needs 100-200 bytes of overhead for every packet. Therefore set the Global Gateway Config MTU to a value 200 lower than the TEP MTU (see sections 1.1 and 1.2)

- Default is 1500

- Set to 8800 when Global Tunnel Endpoint MTU is set to 9000

Configure the VM Guest OS for Jumbo support

To benefit from Jumbo frames, such as higher bandwidth, on VMs that run on overlay networks, set the MTU of the VM Guest OS to 8800, not 9000. This accounts for the additional 100 to 200 bytes of overhead of the GENEVE protocol.
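The arithmetic is simple enough to sketch. The 200-byte headroom is the upper bound for the GENEVE overhead mentioned in this post; the variable and function names are hypothetical.

```shell
#!/bin/sh
# Sketch: derive the inner MTU (Global Gateway Config and guest OS)
# from the TEP MTU, reserving 200 bytes for GENEVE overhead.
GENEVE_HEADROOM=200

# $1 = TEP MTU
inner_mtu() {
    echo $(( $1 - GENEVE_HEADROOM ))
}

echo "TEP 9000 -> gateway / guest MTU $(inner_mtu 9000)"
echo "TEP 1700 -> gateway / guest MTU $(inner_mtu 1700)"
```

The same rule also holds for the defaults mentioned earlier: a TEP MTU of 1700 leaves room for the default gateway MTU of 1500.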

Windows

The Windows NIC driver needs to support Jumbo frames. Depending on the NIC vendor implementation you can just “Enable” it or you need to fill in a value.

On my Windows 11 host and on a Windows Server VM (vmxnet3 NIC) the MTU is set to 1514 by default. Why 1514, you might think? In this case Windows includes the frame header size (14 bytes). To enable Jumbo support, set the value to 8814.

On other Windows VMs (E1000 NIC), Jumbo frames can only be “Enabled” or “Disabled”. When the driver only offers an “Enable” option, chances are the MTU is set to 9000, which is too large and could cause frame drops. In those cases, leave it “Disabled” or test thoroughly.

Linux

On Linux, the best way to configure the MTU depends on the distro. Ubuntu uses Netplan, while Red Hat uses NetworkManager. That is not the topic of this post, so I’ll only touch on it briefly.

Checking the current MTU:

user@host:~$ ip link show | grep mtu

...

2: enp0s31f6: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

Set the MTU to a higher value (this requires root privileges):

user@host:~$ sudo ip link set enp0s31f6 mtu 8800

user@host:~$ ip link show | grep mtu

...

2: enp0s31f6: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 8800 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

This way you can instantly set the MTU to a higher value and run some tests. The change does not persist across reboots. For that, use the preferred network configuration tool of your Linux distro, such as Netplan or NetworkManager.
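To check that the new MTU actually works end to end, you can ping another VM with fragmentation prohibited. A minimal sketch, assuming the iputils version of `ping`; the peer address 192.0.2.20 and the function names are placeholders of mine.

```shell
#!/bin/sh
# Sketch: test a guest MTU with a don't-fragment ping (iputils ping).
# 192.0.2.20 is a placeholder for a VM on the same overlay segment.

# ICMP payload = MTU - 20 (IPv4 header) - 8 (ICMP header)
ping_size() {
    echo $(( $1 - 28 ))
}

# $1 = interface, $2 = peer IP
# Reads the interface MTU from sysfs and pings with the DF bit set.
df_ping() {
    iface=$1 peer=$2
    mtu=$(cat "/sys/class/net/$iface/mtu")
    ping -M do -c 3 -s "$(ping_size "$mtu")" -I "$iface" "$peer"
}

# Usage (not executed here):
#   df_ping enp0s31f6 192.0.2.20
echo "payload for MTU 8800: $(ping_size 8800)"
```

If a hop in the path has a smaller MTU, the ping fails instead of being silently fragmented, which makes this a quick way to spot a misconfigured component.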

Implementation scenarios

Traffic can pass through the NSX stack in several ways: with or without Edge Nodes, and with or without the physical upstream network being involved. Let’s look at what needs to be configured in each scenario.

| Scenario | Edge Nodes required | Scenario description | Where to enable Jumbo frames |
| --- | --- | --- | --- |
| VM to VM Layer 2 traffic within a single overlay segment | No | Non-routed Layer 2 traffic | Guest OS, dvSwitch, Physical network |
| VM to VM Layer 3 traffic using distributed routing via two (T0 / T1) connected segments | No | Routed Layer 3 traffic, routing performed by DR component of the source ESXi host | Guest OS, dvSwitch, Physical network |
| VM to VM Layer 3 traffic using non-distributed routing via two (T0 / T1) connected segments | Yes | Routed Layer 3 traffic, routing performed by SR component of T1 / T0 (stateful services configured) | Guest OS, dvSwitch, Physical network, Global Tunnel Endpoint (TEP), Global Gateway Configuration |
| VM to physical host traffic, where physical host is somewhere on the physical network | Yes | Routed Layer 3 traffic, routing performed by SR components of T1 / T0 (passed upstream to physical router and host) | Guest OS, dvSwitch, Physical network, Global Tunnel Endpoint (TEP), Global Gateway Configuration, T0, Upstream router, Physical host |

The most basic scenario looks like this.

Performance

The NSX 4.2.1 Reference Design Guide shows that when Jumbo frames are enabled on ESXi, NSX, the VM and the physical network, bandwidth can increase substantially.

In the example, a 2.5x increase in bandwidth was observed using iperf between VMs running on different ESXi hosts. Iperf was configured to use 4 sessions, and the ESXi hosts used 40 Gbps NICs. In both tests the platform was enabled for Jumbo frames; only the VM MTU configuration was changed.
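This does not reproduce the measurement from the guide, but a back-of-the-envelope sketch shows why a larger guest MTU helps: the same amount of data needs far fewer packets, and much of the CPU cost is paid per packet. The sketch assumes TCP over IPv4 without options (40 bytes of headers per packet); the function name is my own.

```shell
#!/bin/sh
# Sketch: packets needed to transfer a number of bytes at a given MTU.
# Assumes TCP over IPv4 without options: 20 + 20 = 40 header bytes.
# $1 = bytes to transfer, $2 = MTU
packets_needed() {
    bytes=$1 mtu=$2
    mss=$(( mtu - 40 ))                 # TCP payload per packet
    echo $(( (bytes + mss - 1) / mss )) # round up
}

one_gib=$(( 1024 * 1024 * 1024 ))
echo "MTU 1500: $(packets_needed "$one_gib" 1500) packets per GiB"
echo "MTU 8800: $(packets_needed "$one_gib" 8800) packets per GiB"
```

With an MTU of 8800 roughly six times fewer packets are needed for the same payload.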

To conclude

For multiple use cases, enabling Jumbo frames in your NSX environment can make a difference in bandwidth. A few that quickly come to mind are VM agent-level backups, file servers and high-bandwidth upstream routing.

Configuring Jumbo frames is only one part of the tuning you can do in your NSX environment. Check the excellent “NSX Reference Design Guide” by Luca Camarda and the white paper “VMware NSX-T Performance considerations on Intel platforms” by Samuel Kommu, which both provide guidance on tuning your environment.

Useful links

Blah.cloud: How to test if 9000 MTU/Jumbo Frames are working

NSX – Performance Testing Scripts

Pktgen – Traffic Generator powered by DPDK

VMware: NSX 4.2.1 Reference Design Guide by Luca Camarda
